Professors’ AI twins loosen schedules, boost grades

Busy educators at several large institutions have in recent months begun using "digital twins," powered by generative artificial intelligence platforms, to field questions from their students. One company involved in the deployments told EdScoop that professors using the technology are seeing leaps in engagement: 75% of their students ask questions about coursework and assignments, compared with a 14% engagement rate found with traditional chatbots.

David Clarke, the founder and chief executive of Praxis AI, said his company's software, which uses Anthropic's Claude models as its engine, is being used at Clemson University, Alabama State University and the American Indian Higher Education Consortium, which includes 38 tribal colleges and universities. A key benefit of the technology, he said, is that the twins let faculty and teaching assistants offload the bulk of basic questions asked outside office hours, leaving room for more substantive conversations in person.

“They said the majority of their questions now are about the subject matter, are complicated, because all of the lower end logistical questions are being handled by the AI,” Clarke said.

Praxis, which has a business partnership with Instructure, the company behind the learning management system Canvas, integrates with universities' learning management systems to "meet students where they are," Clarke said. To train their twins, professors first answer a 30-question personality and behavior assessment. Some also submit videos and other course materials. All of this is fed through an algorithm that generates instructions for Claude to interact with students in a personalized fashion.

"If you have all the right configurations in place and it's integrated with all the course material, the students feel like they're getting as good an answer as maybe they get from the faculty. And they're getting it when they need it," Clarke said, noting that one social sciences class receives 2,000 questions per week and that 90% of those questions are asked off-hours.

In speaking with professors, Clarke said he’s learned that students are asking the same questions repeatedly — about dates, assignment requirements and other basic information that’s usually found in frequently asked question pages or handouts distributed on the first day of class. But students find it more convenient to have a tool they can simply ask questions, rather than needing to hunt down the information themselves, Clarke said.

“Students don’t have to navigate Canvas anymore, they don’t have to go to static pages, they don’t have to read FAQs,” he said. “They just ask the twin and it goes and does that for them.”

Drew Bent, a member of the technical staff at Anthropic, a company that has put safety and ethics near the center of its business model, said that working with companies like Praxis and with universities is important to Anthropic's mission.

“AI has tremendous potential in terms of helping overworked professors, providing support to students, closing equity gaps,” Bent said. “But I think it’s important to call out there are significant concerns. There are privacy concerns, when we talk to faculty there are concerns around cheating. Even students have expressed concerns of AI leading to brain rot.”

Bent said Anthropic's AI models use "strong classifiers and other technologies" to help protect users in education from privacy, cheating and content concerns. Clarke said his middleware also allows professors to audit the responses their twins give students and to continually supply new feedback and data sources that improve those responses. He said there are also safeguards to protect intellectual property.

“There’s multiple layers of training personalization guardrail security that make sure the answers the students are getting are correct and that any information that the faculty put into the system is locked in an IP vault and never shared with the [large language model]. So it’s highly secure,” Clarke said.

Clarke said he launched his digital twin platform with OpenAI's ChatGPT models last April but switched to Anthropic in August because he preferred Claude's personality, guardrails and ability to follow instructions.

Anthropic has put a lot of effort into crafting Claude's personality, Bent said.

“So much of the conversation around AI is how can we build the most intelligent models, IQ,” he said. “But we think a lot about the EQ as well. How do we build a model that can understand the user and in this case the student. They may be stressed about an exam the next day. So we have invested a lot in the character of Claude.”

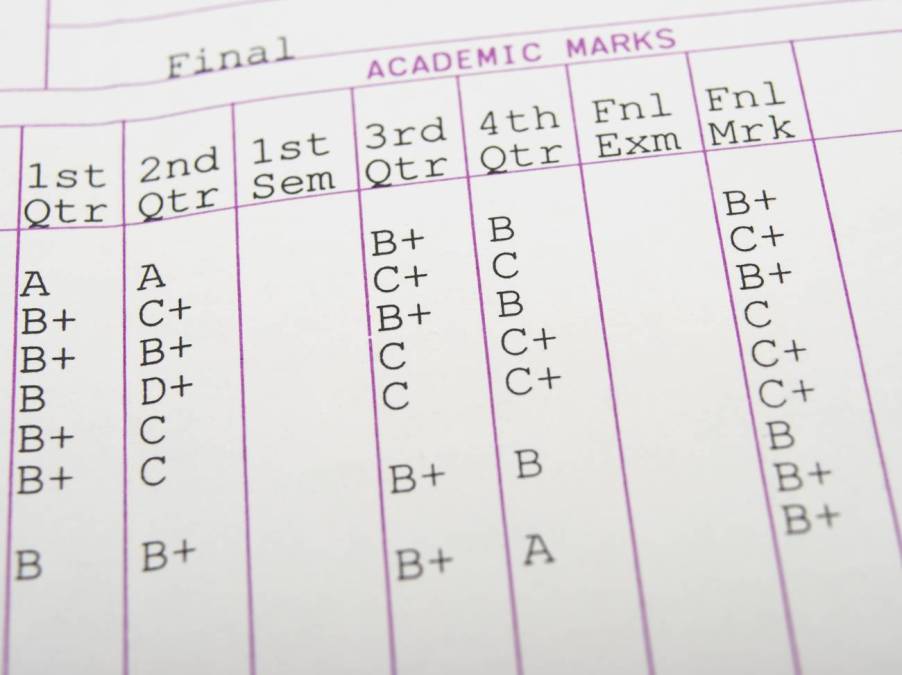

Clarke said his preference for Claude appears to be paying off, in both heightened engagement and educational outcomes. Some classes, he said, have seen average grades rise by a full letter grade, from a C to a B, after adopting digital twins.