New GPTZero feature checks sources for AI content to repel ‘second-hand hallucinations’

As part of its campaign to keep the internet from drowning in AI slop, the artificial intelligence detection company GPTZero on Monday announced a new tool that evaluates the legitimacy of sources cited in the text it scans.

GPTZero Sources is a new component of the AI-detection tool that lets users submit text, see where it makes factual claims and get evaluations of whether those claims are supported or contradicted by other sources. It also goes a step further by running AI detection on the text of the cited source documents, a safeguard intended to hold back the internet's rising sea of AI-generated content.

Company founder Edward Tian said Sources is not a fact-checker, because it doesn't attempt to determine what is true. Instead, it is an automated way to detect citations that point to fraudulent, contradictory or nonexistent sources, all of which are increasingly common thanks to the fabulating AI models growing in popularity across the internet.

“The biggest phenomenon that’s happening is students are citing things that don’t exist and AI is terrible at citing anything,” Tian said. “That’s a huge problem for teachers because now they’re receiving a lot of content that they’re unsure of how credible [the sources] are.”

Tian said he heard from many teachers who wanted a tool to help with the tedious task of checking the citations in their students' papers. Several recent studies have confirmed what teachers already knew: Many students are using generative AI, often to write their essays. One 2023 survey of college students found that 30% used AI while doing homework.

Many educators encourage the use of AI for schoolwork because it can serve as a research tool and learning aid, but using AI uncritically can easily lead students to erroneous information. Academic research shows that generative AI models hallucinate frequently, at rates as high as 40% on more complex tasks. And while the latest AI models have cut the hallucination rate to under 10%, it's still not unusual for a model like OpenAI's GPT-4 to make up statistics, laws, organizations or detailed historical events.

Tian said the new source scanner draws on a database of 220 million real scholarly articles and runs AI detection on the sources cited in submitted text to reduce "second-hand hallucinations," the practice of citing websites and articles that contain or reference AI-generated content.

“We’re keeping the internet clean, starting with its sources,” he said. “We’re the first to run an AI detection on every source, not just the article, to avoid this world where everyone’s citing AI jargon and spam.”

AI slop, the mass of banal software-generated material flooding the internet, worries many, including Google, whose search business depends on being able to identify high-quality content, and the AI companies themselves, which need training data that hasn't been polluted by their own effluvia.

Analyses of the publishing platform Medium, commissioned by Wired last year, found that as much as 47% of posts were AI-generated, compared with just 3.8% in 2018. While Medium's AI problem appears especially pronounced, other platforms are also becoming infested with low-quality articles, books and social media posts produced by AI.

The trend lends new credibility to the Dead Internet Theory, a semi-facetious notion that the web now consists mainly of bots creating content for the enjoyment of other bots.

Google said in 2023 that it would continue rewarding high-quality content, regardless of how it is produced, citing E-E-A-T (experience, expertise, authoritativeness and trustworthiness), its framework for evaluating websites. Some argue that these quality standards categorically exclude AI-generated content from the top search rankings. Yet Google itself began using AI last year to pilfer websites' content to generate summaries in response to search queries, to the chagrin of publishers, who subsequently saw their web traffic dip.

AI companies are trying to reduce how often their models hallucinate through retrieval-augmented generation (RAG), a technique in which tools like Perplexity or ChatGPT retrieve relevant material, for example by searching the internet, and feed it to the model in an attempt to boost the factual accuracy of its output. Tian said RAG is often ineffective, though, because so many websites contain dubious information.

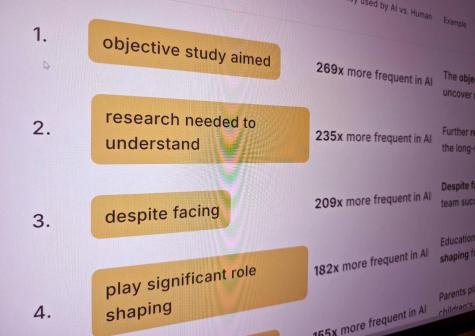

“First of all, the majority of users that use AI in the world are not using some of these more premium models,” he said. “They’re just using the basic version of ChatGPT that spams and hallucinates, so there still needs to be something in the layer after a source is generated, which was the goal of GPTZero. I think this is going to be more and more important as the internet is just filled with AI slop and AI jargon.”